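An Open-Channel Solid State Drive (OC-SSD) is accessed through a logical address space like an ordinary SSD, but the host takes over some of the features that were originally implemented in the SSD firmware layer.

An ordinary SSD consists of a flash translation layer (FTL), bad block management, and hardware components: a flash controller, a host interface controller, and flash chips.

OC-SSD, however, proposes moving some or all of the responsibilities that used to belong to the FTL to the host system. The host system is then allowed to manage behavior such as data placement, garbage collection, and parallelism. Alternatively, features that improve performance can be left inside the SSD: for example bad block management, with the device implementing only a lighter FTL than an ordinary SSD to handle atomic I/Os, metadata management, and so on.

The OC-SSD system architecture on Linux (see the architecture diagram) includes integrated device drivers, several block managers, several targets (which may be custom or general-purpose), and a core.

To initialize an OC-SSD we need a storage protocol such as NVMe, so a system like this has common structures plus a custom command set. With these, the host can issue I/O directly to control the physical flash, or extend and customize the features that the target SSD exposes.

The block manager is responsible for managing the flash blocks on the device. It differs depending on the brand of flash or on the features the SSD manufacturer has added. For example, an OC-SSD needs to manage a metadata file system; a hybrid HDD/SSD product needs to implement block management so that the block manager can read block management information from the hard disk.

A target in the OC-SSD architecture implements, in software, things such as the address translation logic, how data is laid out on flash, and the garbage collection (GC) mechanism, so it has the flexibility to design its own address space: for example block-based, key-value, or object-based storage. Because OC-SSD moves block management, data placement, and garbage collection to the host system, hardware manufacturers can build more generic, or simply simpler, devices, and leave it to the targets to be compatible with the various products on the market and to present a single address space that spans all the drives under this architecture.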
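To make the idea of host-side translation logic more concrete, here is a minimal sketch in C of a page-level logical-to-physical map with out-of-place writes. It is only an illustration under my own assumptions: the names (`host_ftl`, `ftl_write`, and so on) and the sizes are hypothetical and do not come from the OC-SSD code.

```c
#include <stddef.h>
#include <stdint.h>

#define NUM_LBAS  8u            /* logical pages exposed upward (hypothetical size) */
#define NUM_PAGES 16u           /* physical flash pages owned by the host (hypothetical size) */
#define UNMAPPED  UINT32_MAX    /* marker for "no physical page" */

struct host_ftl {
    uint32_t l2p[NUM_LBAS];     /* logical page -> physical page */
    uint8_t  valid[NUM_PAGES];  /* 1 if the physical page holds live data */
    uint32_t next_free;         /* append point: pages are programmed in order */
};

void ftl_init(struct host_ftl *f)
{
    for (size_t i = 0; i < NUM_LBAS; i++)
        f->l2p[i] = UNMAPPED;
    for (size_t i = 0; i < NUM_PAGES; i++)
        f->valid[i] = 0;
    f->next_free = 0;
}

/* Out-of-place write: flash pages cannot be overwritten, so every write
 * goes to a fresh page and the old copy is only marked invalid.
 * Returns the new physical page, or UNMAPPED if the logical address is
 * out of range or no free page is left (garbage collection would be needed). */
uint32_t ftl_write(struct host_ftl *f, uint32_t lba)
{
    if (lba >= NUM_LBAS || f->next_free >= NUM_PAGES)
        return UNMAPPED;
    uint32_t old = f->l2p[lba];
    if (old != UNMAPPED)
        f->valid[old] = 0;      /* the previous copy becomes garbage */
    uint32_t ppa = f->next_free++;
    f->valid[ppa] = 1;
    f->l2p[lba] = ppa;
    return ppa;
}

/* Translate a logical page to its current physical page. */
uint32_t ftl_lookup(const struct host_ftl *f, uint32_t lba)
{
    return lba < NUM_LBAS ? f->l2p[lba] : UNMAPPED;
}

/* Count written pages that no longer hold live data: the work waiting for GC. */
uint32_t ftl_garbage_pages(const struct host_ftl *f)
{
    uint32_t n = 0;
    for (uint32_t p = 0; p < f->next_free; p++)
        if (!f->valid[p])
            n++;
    return n;
}
```

Writing the same logical page twice leaves one invalid physical page behind, which is exactly the garbage that a host-side GC policy would later have to reclaim.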

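Finally, the OC-SSD architecture has a core, which acts as the mediator between the device drivers, the block managers, and the targets.

The core implements the functionality shared across targets, for example initialization, teardown, and performance counters.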

ref: http://openchannelssd.readthedocs.org/en/latest/

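My thoughts: whether a product should move part of the FTL's original responsibilities to the host is, I think, technically feasible either way, on the host or on the device. But a product is a commodity, so market acceptance has to be considered. Market acceptance comes from brand and price-performance ratio. Setting brand aside, price comes from development and production cost, so we have to ask whether this architecture would affect the existing industry ecosystem and whether vendors would be willing to push such a product. On the performance side, we first have to ask where the bottleneck of existing products is. Is this architecture a way to remove that bottleneck, or could switching to the OC-SSD architecture create a next-generation product that eliminates the bottlenecks of the original architecture outright? This approach offers a way of thinking about the flash ecosystem that differs from the one in my "bcache file system notes" post. Research on data storage never stops, which shows that keeping records has always been an indispensable part of human history.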

FunnyMagic&Software

Sunday, September 6, 2015

Wednesday, August 26, 2015

bcache file system notes

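Browsing the Linux mailing list again today, I found a rather interesting post. I'm taking notes here so I can follow how it develops.

It is about a new file system, and appears to have been posted by its author (who used to work at Google).

According to the post, bcachefs has performance and reliability comparable to ext4 and xfs while offering btrfs/zfs-style features. I should look into this file system when I have time.

Back to the topic: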

bcachefs is a modern COW(copy-on-write?) filesystem with checksumming, compression, multiple devices, caching,

and eventually snapshots and all kinds of other nifty features.

Here is the poster's description of the bcachefs features:

FEATURES:

- multiple devices

(replication is like 80% done, but the recovery code still needs to be

finished).

- caching/tiering (naturally)

you can format multiple devices at the same time with bcacheadm, and assign

them to different tiers - right now only two tiers are supported, tier 0

(default) is the fast tier and tier 1 is the slow tier. It'll effectively do

writeback caching between tiers.

- checksumming, compression: currently only zlib is supported for compression,

and for checksumming there's crc32c and a 64 bit checksum. There's mount

options for them:

# mount -o data_checksum=crc32c,

Caveat: don't try to use tiering and checksumming or compression at the same

time yet, the read path needs to be reworked to handle both at the same time.

PLANNED FEATURES:

- snapshots (might start on this soon)

- erasure coding

- native support for SMR drives, raw flash

The poster also ran some performance tests on the file system, using dbench on a high-end PCIe flash device.

Here's some dbench numbers, running on a high end pcie flash device:

| Workload | Filesystem | Throughput (MB/sec) | Max latency (ms) |
|---|---|---|---|
| 1 thread, O_SYNC | bcache | 225.812 | 18.103 |
| 1 thread, O_SYNC | ext4 | 454.546 | 6.288 |
| 1 thread, O_SYNC | xfs | 268.81 | 1.094 |
| 1 thread, O_SYNC | btrfs | 271.065 | 74.266 |
| 20 threads, O_SYNC | bcache | 1050.03 | 6.614 |
| 20 threads, O_SYNC | ext4 | 2867.16 | 4.128 |
| 20 threads, O_SYNC | xfs | 3051.55 | 10.004 |
| 20 threads, O_SYNC | btrfs | 665.995 | 1640.045 |
| 60 threads, O_SYNC | bcache | 2143.45 | 15.315 |
| 60 threads, O_SYNC | ext4 | 2944.02 | 9.547 |
| 60 threads, O_SYNC | xfs | 2862.54 | 14.323 |
| 60 threads, O_SYNC | btrfs | 501.248 | 8470.539 |
| 1 thread | bcache | 992.008 | 2.379 |
| 1 thread | ext4 | 974.282 | 0.527 |
| 1 thread | xfs | 715.219 | 0.527 |
| 1 thread | btrfs | 647.825 | 108.983 |
| 20 threads | bcache | 3270.8 | 16.075 |
| 20 threads | ext4 | 4879.15 | 11.098 |
| 20 threads | xfs | 4904.26 | 20.290 |
| 20 threads | btrfs | 647.232 | 2679.483 |
| 60 threads | bcache | 4644.24 | 130.980 |
| 60 threads | ext4 | 4405.16 | 69.741 |
| 60 threads | xfs | 4413.93 | 131.194 |
| 60 threads | btrfs | 803.926 | 12367.850 |

A few parts of the post that interested me:

"Where'd that 20% of my space go?" - you'll notice the capacity shown by df is

lower than what it should be. Allocation in bcachefs (like in upstream bcache)

is done in terms of buckets, with copygc required if no buckets are empty, hence

we need copygc and a copygc reserve (much like the way SSD FTLs work).

It's quite conceivable at some point we'll add another allocator that doesn't

work in terms of buckets and doesn't require copygc (possibly for rotating

disks), but for a COW filesystem there are real advantages to doing it this way.

So for now just be aware - and the 20% reserve is probably excessive, at some

point I'll add a way to change it.

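So users will notice that some of their capacity has been eaten? Storage vendors seem to care a lot about this.

What ordinary users are most sensitive to is how much capacity their money buys. XD

The author mentions that the reserve is currently 20% and that a way to change its size may be added later.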
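As a rough illustration of what such a reserve means for the capacity reported by df, the arithmetic can be sketched as below. The post does not say how bcachefs actually computes the reserve, so the function name and the integer rounding are my own assumptions.

```c
#include <stdint.h>

/* Buckets left for user data after holding back reserve_percent of all
 * buckets for copygc. Hypothetical helper; integer division rounds the
 * reserve down, and very large bucket counts could overflow the multiply. */
uint64_t usable_buckets(uint64_t total_buckets, unsigned reserve_percent)
{
    if (reserve_percent > 100)
        reserve_percent = 100;
    uint64_t reserved = total_buckets * reserve_percent / 100;
    return total_buckets - reserved;
}
```

With a 20% reserve, a device of 1000 buckets would report 800 buckets of usable space; making the percentage a parameter is the kind of knob the author says may come later.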

Mount times:

bcachefs is partially garbage collection based - we don't persist allocation

information. We no longer require doing mark and sweep at runtime to reclaim

space, but we do have to walk the extents btree when mounting to find out what's

free and what isn't.

(We do retain the ability to do a mark and sweep while the filesystem is in use

though - i.e. we have the ability to do a large chunk of what fsck does at

runtime).

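This part says that bcachefs no longer needs to run mark and sweep at runtime to reclaim space; instead, it learns what is free and what is not by walking the extents btree at mount time.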
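The idea of rebuilding allocation information at mount time instead of persisting it can be sketched as follows. This is a toy model under my own assumptions: a flat array stands in for the extents btree, and `struct extent`, `NUM_BUCKETS`, and `rebuild_bucket_usage` are hypothetical names, not bcachefs code.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define NUM_BUCKETS 8u          /* hypothetical device size in buckets */

/* Hypothetical, simplified extent: some live sectors stored in one bucket. */
struct extent {
    uint32_t bucket;
    uint32_t sectors;
};

/* Walk every extent once and accumulate the live sectors per bucket.
 * Buckets that end up with zero live sectors are free.
 * Returns the number of free buckets. */
size_t rebuild_bucket_usage(const struct extent *extents, size_t n,
                            uint32_t live_sectors[NUM_BUCKETS])
{
    memset(live_sectors, 0, NUM_BUCKETS * sizeof(live_sectors[0]));
    for (size_t i = 0; i < n; i++)
        if (extents[i].bucket < NUM_BUCKETS)
            live_sectors[extents[i].bucket] += extents[i].sectors;

    size_t free_buckets = 0;
    for (size_t b = 0; b < NUM_BUCKETS; b++)
        if (live_sectors[b] == 0)
            free_buckets++;
    return free_buckets;
}
```

The cost of this approach is visible in the sketch: the walk is proportional to the number of extents, which is why the post discusses it under mount times.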

Below the post, an engineer who apparently works at HGST asked him a question:

How do you imagine SMR drives support? How do you feel about libzbc

using for SMR drives support? I am not very familiar with bcachefs

architecture yet. But I suppose that maybe libzbc model can be useful

for SMR drives support on bcachefs side. Anyway, it makes sense to

discuss proper model.

Another question that caught my interest:

How do you imagine raw flash support in bcachefs architecture? Frankly

speaking, I am implementing NAND flash oriented file system. But this

project is proprietary yet and I can't share any details. However,

currently, I've implemented NAND flash related approaches in my file

system only. So, maybe, it make sense to consider some joint variant of

bcachefs and implementation on my side for NAND flash support. I need to

be more familiar with bcachefs architecture for such decision. But,

unfortunately, I suspect that it can be not so easy to support raw flash

for bcachefs. Of course, I can be wrong.

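He says he is implementing a NAND-flash-oriented file system. From the way he asks, he seems to be developing a file system that does not need a flash controller. I have also been wondering when flash controllers will no longer be needed, setting aside the recently announced and much-hyped XPoint. Will future products go back to using raw flash directly? Where is the performance bottleneck of a product?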


Monday, June 8, 2015

[HOW TO]: use debugfs functions in kernel module

This post uses the module [mmc_block_test] as an example.

Because mmc_block_test already uses debugfs, you will see debugfs_create_dir and debugfs_create_file in the source code. When the module is installed or triggered, debugfs creates a directory for it (by default under /sys/kernel/debug/).

With debugfs, we don't need to write a user-space application to test the module's functions.

Actual operation on DragonBoard

Step 1. Check the current I/O scheduler:

#cat /sys/block/mmcblk0/queue/scheduler

Step 2. Switch the I/O scheduler; the related functions will then appear in debugfs:

#echo test-iosched > /sys/block/mmcblk0/queue/scheduler

Step 3. Move to the functions folder, where we will see /tests/ and /utils/:

#cd /sys/kernel/debug/test-iosched/

Step 4. On Linux, the echo command triggers the file's .write handler and the cat command triggers its .read handler. For example, in a terminal:

#echo 4 > write_packing_control_test

runs 4 cycles of write_packing_control_test_write, and

#cat write_packing_control_test

shows what write_packing_control_test_write will do.
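The reason echo and cat are enough is that they reduce to ordinary write() and read() system calls on the debugfs file, which the kernel routes to the .write and .read handlers. A minimal user-space sketch of the same two operations in C follows; the helper names `attr_write` and `attr_read` are my own and are not part of mmc_block_test.

```c
#include <fcntl.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Roughly what `echo VALUE > PATH` does: open for writing, one write(), close.
 * Returns the number of bytes written, or -1 on error. */
ssize_t attr_write(const char *path, const char *value)
{
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;
    ssize_t n = write(fd, value, strlen(value));
    close(fd);
    return n;
}

/* Roughly what `cat PATH` does: open for reading and read() until EOF.
 * Stores a NUL-terminated string in buf; returns its length, or -1 on error. */
ssize_t attr_read(const char *path, char *buf, size_t len)
{
    if (len == 0)
        return -1;
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    size_t total = 0;
    ssize_t n = 0;
    while (total < len - 1 &&
           (n = read(fd, buf + total, len - 1 - total)) > 0)
        total += (size_t)n;
    close(fd);
    if (n < 0)
        return -1;
    buf[total] = '\0';
    return (ssize_t)total;
}
```

Calling `attr_write` on the write_packing_control_test file under /sys/kernel/debug/test-iosched/ with the string "4\n" would be the programmatic equivalent of the echo in Step 4, assuming the process has permission to access debugfs.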

ref: Documentation/filesystems/debugfs.txt

Saturday, April 11, 2015

Program "make" not found in PATH

Today I copied a native project from my Linux PC and needed to continue developing it on a Windows PC. After importing the project into Eclipse, an error showed up: Program "make" not found in PATH

This post is a record for myself, so I can solve this problem when I need to set up the build environment on another Windows computer.

My solution:

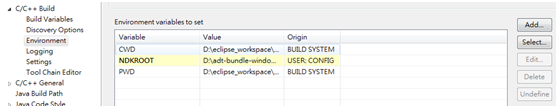

1. In Properties -> C/C++ Build, change the Build command from make to ${NDKROOT}/ndk-build.cmd

The value of NDKROOT is the location of your NDK.

In my case it is D:\adt-bundle-windows-x86_64-20140702\android-ndk-r9d

That's all. Enjoy your code~

Friday, February 6, 2015

Fatal signal 11(SIGSEGV) at 0xDEADBAAD(code=1)

Recently I have been developing an Android app that needs to use an open-source native library.

After running the app on the target smartphone, errors kept occurring whenever it accessed the native code,

such as,

ABORTING: LIBC: ARGUMENT IS INVALID HEAP ADDRESS IN dlfree addr=0x769c6df8

Fatal signal 11(SIGSEGV) at 0xDEADBAAD(code=1), thread

or

ABORTING: LIBC: ARGUMENT IS INVALID HEAP MEMORY CORRUPTION IN dlmalloc

After searching for related information on Stack Overflow and Google,

below are some possible causes that pointed me in the right direction for debugging.

1. A function call being made from two different threads at the same time.

2. It is related to a memory error on the device (such as calling free in native code on memory that was never malloc'ed).

3. About the 0xDEADBAAD error message: 0xDEADBAAD is used by the Android libc abort() function when native heap corruption is detected.

In my case, following the hints above, I finally found that the root cause was a memory alignment issue.
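The post does not record the exact alignment bug, so the following is only a sketch of one common form and its usual fix: reading or writing a multi-byte value at an address that may not be aligned. Copying the bytes with memcpy is well defined at any address, whereas dereferencing a cast pointer such as `*(uint32_t *)p` is not. The helper names are hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Load a 32-bit value from an address that may be unaligned.
 * memcpy copies byte by byte as far as the language is concerned, so this
 * is safe where a direct pointer cast and dereference would not be. */
uint32_t load_u32(const void *p)
{
    uint32_t v;
    memcpy(&v, p, sizeof v);
    return v;
}

/* Store a 32-bit value to an address that may be unaligned. */
void store_u32(void *p, uint32_t v)
{
    memcpy(p, &v, sizeof v);
}
```

Compilers typically turn these small memcpy calls into a single load or store on targets that allow unaligned access, so the safer form usually costs nothing.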

Terrible days...


[Linux] find & grep

When tracing the source code of a new project, whether it comes from a customer or from an online open-source repository, we often need to "find" a file by name or "grep" for content in files.

Below are the commands to do that.

$find . -name "*.i" -print

finds all files whose names end with ".i" in the current folder and all folders under it.

$grep -rn "test" .

finds the text "test" in files in the current folder and all folders under it.


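Wednesday, July 30, 2014

The feature of runtime pm in mmc_get_card & mmc_put_card

While porting a flow developed by SanDisk today, I found two functions that are not defined in the kernel I am currently using:

mmc_get_card and mmc_put_card.

After some googling, it turns out they simply wrap the original mmc_claim_host and mmc_release_host together with the runtime PM functions.

[PATCH V3 3/4] mmc: block: Enable runtime pm for mmc blkdevice

This version of the patch contains the definitions of mmc_get_card and mmc_put_card, which replace the original calls to mmc_claim_host and mmc_release_host.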


    void mmc_get_card(struct mmc_card *card)
    {
        pm_runtime_get_sync(&card->dev);
        mmc_claim_host(card->host);
    }
    EXPORT_SYMBOL(mmc_get_card);

    /*
     * This is a helper function, which releases the host and drops the runtime
     * pm reference for the card device.
     */
    void mmc_put_card(struct mmc_card *card)
    {
        mmc_release_host(card->host);
        pm_runtime_mark_last_busy(&card->dev);
        pm_runtime_put_autosuspend(&card->dev);
    }
    EXPORT_SYMBOL(mmc_put_card);

Brief descriptions of these functions can be found in Documentation/power/runtime_pm.txt.

int pm_runtime_get_sync(struct device *dev);

- increment the device's usage counter, run

pm_runtime_resume(dev) and return its result

int pm_runtime_resume(struct device *dev);

- execute the subsystem-level resume callback for the device;

returns 0 on success, 1 if the device's runtime PM status was already 'active'

or error code on failure, where -EAGAIN means it may be safe to attempt to

resume the device again in future, but 'power.runtime_error' should be checked

additionally, and -EACCES means that 'power.disable_depth' is different from 0

void pm_runtime_mark_last_busy(struct device *dev);

- set the power.last_busy field to the current time

int pm_runtime_put_autosuspend(struct device *dev);

- decrement the device's usage counter; if the result is 0 then run

pm_request_autosuspend(dev) and return its result


int pm_request_autosuspend(struct device *dev);

- schedule the execution of the subsystem-level suspend callback for the

device when the autosuspend delay has expired; if the delay has already

expired then the work item is queued up immediately

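The documentation contains the following passage. The point here is that since mmc_get_card ends up calling pm_runtime_resume, runtime PM must, according to the documentation, have been enabled somewhere else beforehand.

If the default initial runtime PM status of the device (i.e. 'suspended')

reflects the actual state of the device, its bus type's or its driver's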

->probe() callback will likely need to wake it up using one of the PM core's

helper functions described in Section 4. In that case, pm_runtime_resume()

should be used. Of course, for this purpose the device's runtime PM has to be

enabled earlier by calling pm_runtime_enable().

The documentation then has the following passage, which explains why mmc_put_card calls these two functions.

Inactivity is determined based on the power.last_busy field. Drivers should

call pm_runtime_mark_last_busy() to update this field after carrying out I/O,

typically just before calling pm_runtime_put_autosuspend().
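To see how the usage counter, last_busy, and the autosuspend delay fit together, here is a toy user-space model in C. It is not kernel code and greatly simplifies the real runtime PM core; every `toy_` name is hypothetical, and timestamps are plain integers supplied by the caller.

```c
#include <stdbool.h>
#include <stdint.h>

/* Toy stand-in for the runtime PM state of one device. */
struct toy_dev {
    int      usage_count;        /* references held by users of the device */
    bool     active;             /* true = resumed, false = suspended */
    uint64_t last_busy;          /* time of the last recorded activity */
    uint64_t autosuspend_delay;  /* idle time required before suspending */
};

/* Models pm_runtime_get_sync(): take a reference and resume if needed.
 * Returns 1 if the device was already active, 0 if it had to be resumed. */
int toy_get_sync(struct toy_dev *d)
{
    d->usage_count++;
    if (d->active)
        return 1;
    d->active = true;
    return 0;
}

/* Models pm_runtime_mark_last_busy(): record when I/O last happened. */
void toy_mark_last_busy(struct toy_dev *d, uint64_t now)
{
    d->last_busy = now;
}

/* Models pm_runtime_put_autosuspend(): drop the reference. The suspend
 * itself is deferred until the device has been idle long enough. */
void toy_put_autosuspend(struct toy_dev *d)
{
    if (d->usage_count > 0)
        d->usage_count--;
}

/* Models the autosuspend timer firing at time `now`.
 * Returns true if the device was suspended by this call. */
bool toy_autosuspend_tick(struct toy_dev *d, uint64_t now)
{
    if (d->active && d->usage_count == 0 &&
        now - d->last_busy >= d->autosuspend_delay) {
        d->active = false;
        return true;
    }
    return false;
}
```

In this model, mmc_get_card corresponds to `toy_get_sync` before claiming the host, and mmc_put_card corresponds to `toy_mark_last_busy` followed by `toy_put_autosuspend`, so that the idle period is measured from the end of the last I/O rather than from some earlier point.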
